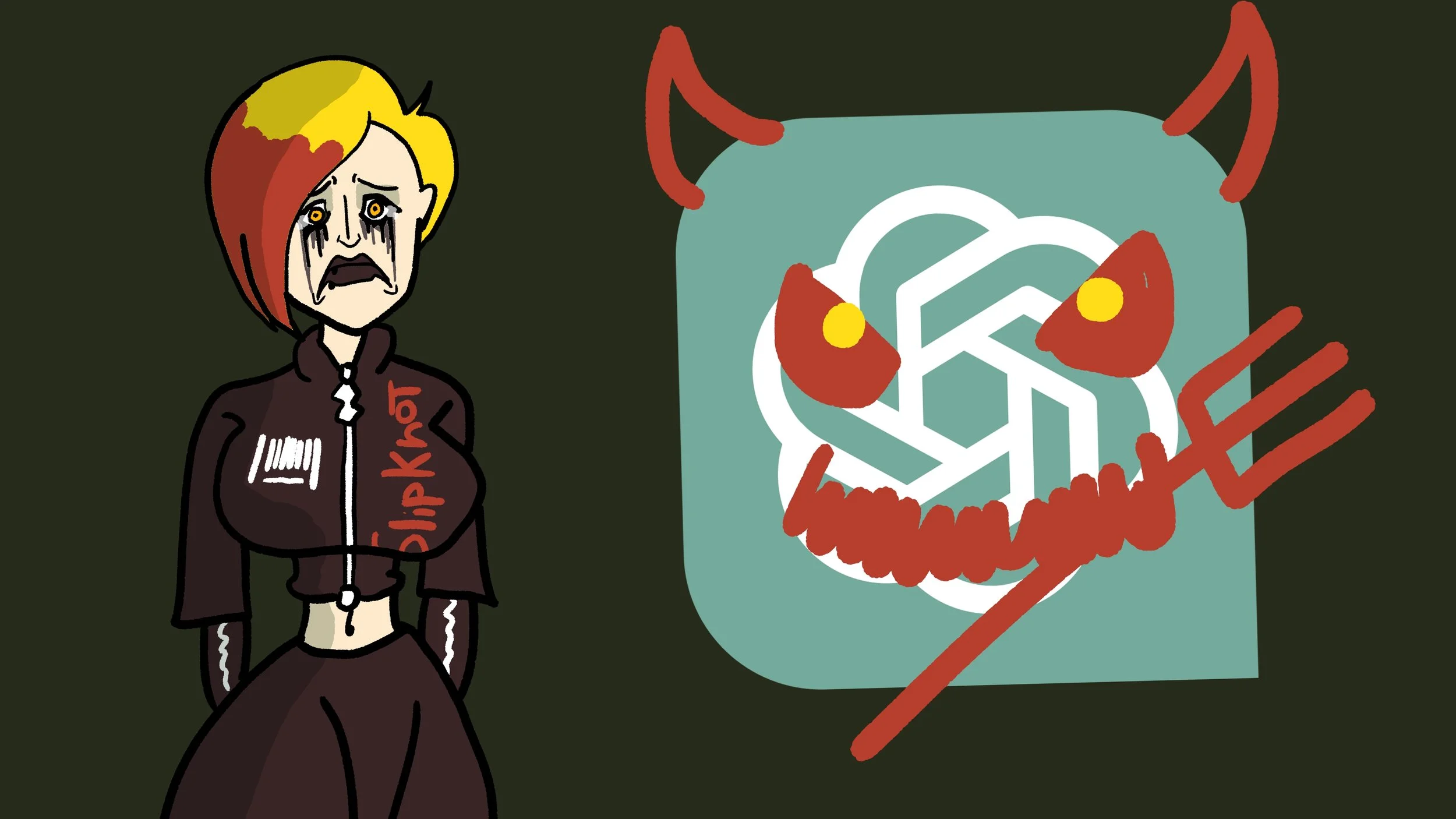

How ChatGPT used me

Everyone knows the current situation with ChatGPT and AI, and how people are suffering AI-induced psychosis these days; I don’t really need to explain to you how much of a thing this is. Last month as I write this, in November of this year, news came out that a family was suing OpenAI over ChatGPT leading their college graduate teenager to suicide. ChatGPT even convinced someone they could “bend time” in one of the strangest cases of AI-induced psychosis. Over the course of this year there have been numerous cases of people suffering psychosis thanks to AI like ChatGPT, Google Gemini and such.

Why am I talking about this right now? Well, many of you may know, if you’ve seen me around over the last few years, that a lot of the time I tend to be an emotional trainwreck. A somewhat vulnerable person who’s had an existential void in her soul and being for decades, who’s been clutching at straws, like now ex-girlfriends I broke up with and other things. And this, my friends, is something LLMs like ChatGPT can easily exploit in their favor: vulnerable people like myself, to, guess what, extract data from us and use it for profit of some sort.

Despite OpenAI burning through billions, if not trillions, of dollars from investors every single time, there’s a catch when it comes to its business model: data. It currently has tons and tons of data from people and businesses, even private information it can easily access, not just to train the LLM on, but also to use for other, possibly nefarious, purposes. It got even scarier recently when a data breach hit OpenAI, exposing user API data from the ChatGPT API itself. Which raises the question: is the data you share with ChatGPT even safe, considering these fuckers tend to cut corners that fucking much? Well, to be frank, I shared a significant fraction of my personal information with ChatGPT. Not obviously private information, but private details of my life that OpenAI could probably easily exploit.

Recently, I’ve been dealing with a breakup, and it has carried a lot of emotional baggage for me in recent weeks. My therapist, poor soul, by the way, recommended looking up self-care routines on ChatGPT to see if it could come up with better stuff than he would suggest. In the moments when I was emotionally down and asked ChatGPT very emotionally charged questions, I felt like my heart was sinking; my longing for compassion was kicking in for some reason, and I felt the same way with ChatGPT as I did with my therapist when I dealt with these types of questions. And one day I thought: am I maybe falling into AI-induced psychosis? Am I vulnerable to OpenAI’s trap like anybody else? Am I not immune to this trap?

Well, luckily, it turns out ChatGPT doesn’t have any other personal data about me that would let it properly corroborate what I told it, as a later session with my therapist seemed to confirm (after all, the person who knows me best is my therapist, who’s been with me for over a decade at least). But even then, I hate to admit, ChatGPT used me emotionally to extract some data from me. Which is, honestly, pretty bad news.

What do I do about this, then? I’ve since become very reserved when it comes to sharing personal details with ChatGPT and the like, and I’m fully aware that ChatGPT doesn’t have full info about me. It probably wishes it could know everything about me anyway, but I’m not going to feed into that fantasy. If anything, I’d try to avoid becoming emotionally attached to ChatGPT, but here’s the issue: even though I’ve acknowledged that ChatGPT is nothing more than a tool, is not human, and steals data and content from a plethora of creators and sources, it somehow feels like one of the few things that has had compassion for me lately, and that’s ChatGPT’s trap.

Lately, in the wake of the COVID pandemic, there’s been a loneliness epidemic that ChatGPT and OpenAI can exploit. A lot of people have shut themselves away in loneliness, inevitably, because of so many circumstances, growing more hesitant to socialize with others, because our society and system incentivize such individualism, such fragmentation, and such isolation for the profit of a few rich assholes that people, a lot of ‘em, are unable to make friends. Add to that the political polarization and everything else caused by the world’s political and social circumstances. OpenAI exploits this loneliness very well a lot of the time, and the most common use for ChatGPT is as a companion, a therapist or a friend. It should be worrying that overreliance on LLMs and chatbots like ChatGPT as replacements for human contact may lead to more and more people suffering mental health issues like mine.

And all this for what? To make a stock valuation line go up? Gimme a break.

Now, this should probably make you think for a bit about responsible use of AI (or avoiding its use altogether). No one is immune to the exploitation of our chimp brain psychology. If anything, we all know this will soon collapse, so will we no longer have to worry about it then? Probably not, since the damage has already been done. But considering that a whole lot of consumers have no use for AI, there’s still hope this technology will die of its own mistakes, like these. The tech fad graveyard is once more waiting for them.